Manual Therapy Online - Articles

Clinical Reasoning; Methods and Tools

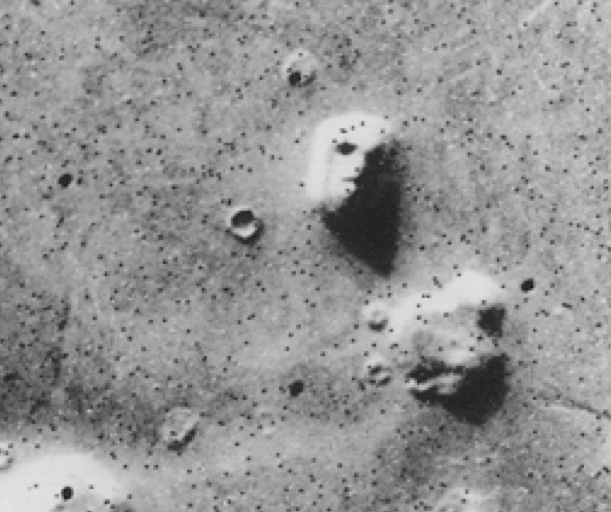

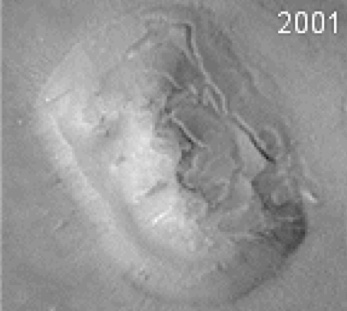

Most everyday problems are solved without giving much thought to how they are solved. Many are solved by pattern recognition: in effect, the person solving the problem is an expert, having come across the problem so frequently that sometimes it doesn’t even seem like a problem any longer. Humans are pattern-recognizing beings, so much so that we see patterns and form conclusions where none exist and none are warranted. For example, the “face on Mars” is easily recognized as a face, but many people read more into it than that: alien artwork is often cited as a cause, while the more mundane but far more probable explanation, that it is a simple geological feature, is often written off as impossible.

Even after the most recent NASA photographs which show it as nothing more than a mesa, people still will not accept the facts of the matter.

This is an oldie but a goodie. Read the following sentence:

My sleplcehcker gvae me ntohnig but gerif wihle I worte tihs snentec but it nedeent hvae wrroid bcaeuse you can raed it wthuot too mcuh torulbe.

The understanding of this sentence is due entirely to pattern recognition. But if the pattern is too messed up then difficulties arise.

yM plselhceker vgea em tonghti tbu regfi hweli I trowe sith tensetnce utb ti deenten vaeh dowrri ...

This second messed-up sentence is the beginning of the first, which I’m sure you figured out from context, but it is unlikely that you managed to read it very easily.
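The difference between the two scrambled sentences can be reproduced with a short sketch. It assumes, from inspection of the examples above, that the readable version keeps the first and last letter of each word in place while the harder version shuffles every letter; the function names and the sample sentence are illustrative only.

```python
import random

def scramble_interior(word, rng):
    """Shuffle only the interior letters, keeping the first and last in place."""
    if len(word) <= 3:
        return word  # nothing to shuffle in very short words
    middle = list(word[1:-1])
    rng.shuffle(middle)
    return word[0] + "".join(middle) + word[-1]

def scramble_all(word, rng):
    """Shuffle every letter, so the first and last letters stop acting as anchors."""
    letters = list(word)
    rng.shuffle(letters)
    return "".join(letters)

def scramble_sentence(sentence, scrambler, seed=0):
    """Apply a word scrambler to each whitespace-separated word."""
    rng = random.Random(seed)  # seeded so the result is repeatable
    return " ".join(scrambler(word, rng) for word in sentence.split())

sentence = "you can read it without too much trouble"
mild = scramble_sentence(sentence, scramble_interior)
severe = scramble_sentence(sentence, scramble_all)
print(mild)    # the word outlines survive, so the pattern is still recognizable
print(severe)  # the anchors are gone, so reading slows down
```

Both versions contain exactly the same letters; only the amount of surviving pattern differs.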

Another problem that is solved very early in your life is how friendly or otherwise to be with somebody the first time that you meet them. You are forced to form a first impression, and you do it immediately and unconsciously: you don’t consciously weigh up their body language, vocabulary, accent, topics of conversation and so on, but rather take in the entire package to create that snap judgment. You are an expert in this, and it is unusual for the first impression to be wrong in any profound manner. There are experts in clinical reasoning, and they are able to do the same provided the problem falls within their field of expertise. Over the years they have learned a lot about a small number of things and have learned how to recognize both the common and uncommon variants of these things very quickly and with a minimum of information. But it takes time for an expert to form, and patients cannot wait, so for the rest of us a more formal approach must exist. This chapter is about the most traditional methods of clinical reasoning: hypothetic-deduction and pattern recognition.

Hypothetic-Deductive Reasoning

This is the method of critical reasoning used when the reasoner is not an expert. It is used extensively in research (by definition, the researcher cannot be an expert in something that is unknown) and by non-expert clinicians.

The following algorithm, taken from Godfrey-Smith’s book (*Peter Godfrey-Smith (2003) Theory and Reality, p. 236.), probably best encapsulates hypothetic-deductive reasoning (HDR):

1. Gather data (observations about something that is unknown, unexplained, or new).

2. Hypothesize an explanation for those observations.

3. Deduce a consequence of that explanation (a prediction). Formulate an experiment to see if the predicted consequence is observed.

4. Wait for corroboration. If there is corroboration, go to step 3. If not, the hypothesis is falsified. Go to step 2.
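The four steps above can be sketched as a loop. This is only an illustration under stated assumptions: the names `Hypothesis`, `Prediction`, `hdr`, `propose` and the toy test labels are hypothetical and do not come from Godfrey-Smith, and a hypothesis that survives is treated as corroborated so far, not as proven.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    experiment: str  # label of the test to run (hypothetical)
    expected: str    # outcome the hypothesis predicts

@dataclass
class Hypothesis:
    name: str
    predictions: list

def hdr(observations, propose, run_experiment):
    """Return the first hypothesis whose predictions are all corroborated."""
    for hypothesis in propose(observations):            # step 2: hypothesize
        for prediction in hypothesis.predictions:       # step 3: deduce a consequence
            observed = run_experiment(prediction.experiment)  # step 4: test it
            if observed != prediction.expected:
                break  # falsified, so go back to step 2
        else:
            return hypothesis  # every prediction was corroborated
    return None  # no candidate survived; more data or new ideas are needed

# Toy usage with made-up labels, purely to show the control flow.
def propose(observations):
    yield Hypothesis("H_a", [Prediction("test_1", "yes")])
    yield Hypothesis("H_b", [Prediction("test_1", "no"), Prediction("test_2", "yes")])

results = {"test_1": "no", "test_2": "yes"}  # step 1 data plus test outcomes
surviving = hdr(["something unexplained"], propose, results.get)
print(surviving.name if surviving else "no hypothesis survived")
```

The loop returns as soon as one hypothesis survives all of its tests, which mirrors the way a falsified hypothesis sends the reasoner back to step 2 rather than back to data gathering.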

The following scenario, from iSTAR Assessment (*http://www.istarassessment.org/srdims/hypothetical-deductive-reasoning-needs-pictures/), describes how this system can be used with a simple experiment.

A student put a drop of blood on a microscope slide and then looked at the blood under a microscope. The magnified red blood cells look like little round balls. After adding a few drops of salt water to the drop of blood, the student noticed that the cells appeared to become smaller.

This observation raises an interesting question: Why do the red blood cells appear smaller? Here are two possible explanations:

- I. Salt ions (Na+ and Cl-) push on the cell membranes and make the cells appear smaller.

- II. Water molecules are attracted to the salt ions so the water molecules move out of the cells and leave the cells smaller.

To test these explanations, the student used some salt water, a very accurate weighing device, and some water-filled plastic bags, and assumed the plastic behaves just like red-blood-cell membranes. The experiment involved carefully weighing a water-filled bag in a salt solution for ten minutes and then reweighing the bag.

What result of the experiment would best show that explanation I is probably wrong?

- A. the bag loses weight

- B. the bag weighs the same

- C. the bag appears smaller

Answer: A

What result of the experiment would best show that explanation II is probably wrong?

- A. the bag loses weight

- B. the bag weighs the same

- C. the bag appears smaller

Answer: B

The student observes the effect of adding salt water to the blood sample. In real life this wouldn’t be directed by an instructor but would be either a formal observation or an accidental one. In either case the observation makes the student wonder why it occurred, and two possibilities offer themselves from the student’s previously accumulated knowledge: either the force of the salt crushes the blood cells (H1) or the high salinity causes water to be drawn from the cells (H2). Both have the same observable effect. To find out which is correct, the student designs an experiment and predicts the result for each possibility. If the bag standing in for the cell loses weight, then water or some other substance is leaving it, which contradicts H1 and supports H2; if there is no change, then H2 cannot be true and H1 triumphs. Of course, if the weight increases, then an alternative explanation must be true, and likely the student’s pre-existing knowledge is inadequate.
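The reasoning in this scenario amounts to a small prediction table. The sketch below is one way to lay it out, assuming that H1 predicts an unchanged weight and H2 predicts weight loss, as described above; the dictionary, the outcome labels and the function name are illustrative.

```python
# What each explanation predicts for the weight of the water-filled bag.
PREDICTIONS = {
    "H1: salt ions push on the membrane": "same",
    "H2: water moves out toward the salt": "loses",
}

def evaluate(observed):
    """Split the explanations into those the result contradicts and those it fits."""
    contradicted = [h for h, predicted in PREDICTIONS.items() if predicted != observed]
    consistent = [h for h, predicted in PREDICTIONS.items() if predicted == observed]
    return contradicted, consistent

for outcome in ("loses", "same", "gains"):
    contradicted, consistent = evaluate(outcome)
    print(f"bag {outcome} weight")
    print("  probably wrong:", contradicted)
    # An empty list here means neither explanation fits and a new one is needed.
    print("  still standing:", consistent)
```

Note that "the bag appears smaller" is not in the table: both explanations predict it, so it cannot separate them.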

To put it into clinical terms:

For this discussion, the clinician makes observations, takes a history, carries out an objective examination and reviews lab and imaging studies to generate an explanation that will, hopefully, explain all of the “facts”, then determines an experiment (the treatment) and predicts the outcome of the treatment (the prognosis). In case you are wondering, I put the word “facts” in quotation marks because they may not actually be facts: cognitive error and bias interfere with the reasoning process as often as not, and what is a fact to the person doing the examination may differ from the “fact” of an independent observer.

This is a tried and trusted procedure, first formally enunciated and named by Whewell in 1837 (* William Whewell (1837) History of the Inductive Sciences). What should be apparent from the above descriptions and examples is that the words “hypothetical” and “hypothesis” are being used in their scientific sense and not in their lay sense, which simply means an idea. A scientific hypothesis is one that is both testable and disprovable, but its formal form is usually arrived at through speculation following an observation, or even a thought experiment, based on previously held knowledge. It is also worth mentioning that “test” does not always mean a formal experiment in the way physical therapy researchers would often like it to mean, with random selection of subjects and the stabilization of all variables except the one being manipulated. Among the many other methods of experimentation, the clinician’s experiment with hypothesis-based treatment is equally valid, although more vulnerable to statistical and methodological nit-picking. In any event, it is the only intervention available to the clinician to see if the hypothesis’s prediction comes to fruition.

So to put the algorithm into more relevant (for this discussion anyway) clinical terms:

It can be seen that, at the very least, the clinician has two absolute requirements for this method to work: accurate facts and accurate pre-existing knowledge. If either of these is absent, then errors will happen at all times except when there is a happy juncture of ignorance and blind luck. The knowledge requirement is in the first instance the responsibility of the student’s and novice clinician’s teachers; the acronym GIGO (garbage in, garbage out) has never been more pointed. It doesn’t matter how intelligent and logical the clinician may be: poor information will lead to mistakes in diagnosis and treatment, although the more intelligent and independently minded the clinician, the quicker the realization will come that his/her teachers sold her/him a bucket of garbage.

The second requirement, accurate facts, is the responsibility of the person relating the facts and the people gathering them. If the patient is lying or unintentionally giving a false account of the condition, then the clinician may have no way of knowing this (although with experience falsehoods are frequently apparent). But cognitive error and bias can also mislead the clinician into hearing or seeing things that are not actually there, interpreting them in an inappropriate manner, failing to give them due importance or giving them too much significance. So another acronym could be VIGO: value in, garbage out. More on this later.

However, there is another problem associated with HDR that is not the fault of the method at all but rather of the way our students are usually asked to carry it out. The idea that all information is critical is on its face patently silly, and the idea that you need as much information as you can gather before making a decision or coming to a solution is just as silly, although less obviously so. If the words “that is relevant to the immediate problem” were inserted into the sentence “do not make a judgment until all information is in and understood”, so that it read “do not make a judgment until all information that is relevant to the immediate problem is in and understood”, we would have a better shot at the solution, as we wouldn’t be overwhelmed by the mass of information that a patient can deliver during the subjective and objective examinations, most of which is completely irrelevant to the issue at hand. This is the method of the expert, and script focused deduction (SFD) attempts to combine the expert’s pattern recognition with HDR to make things clearer for the non-expert.

Pattern Recognition

An Expert

There are various definitions of the expert:

- Somebody who has more slides than you.

- Somebody who travels more to teach than you.

- Somebody who knows more and more about less and less.

- An expert is a man who has made all the mistakes which can be made, in a narrow field. (Niels Bohr)

- An expert is someone who has succeeded in making decisions and judgment simpler through knowing what to pay attention to and what to ignore. (Edward de Bono)

- In the beginner’s mind there are many possibilities, in the expert’s mind there are few. (Shunryu Suzuki)

Failure is, in my opinion, an extremely important component in becoming an expert, provided you honestly reflect on the failure and make a smaller mistake the next time. Alex Ferguson, the former manager of Manchester United, said that you learn from failure, not from success.

If you’re wondering why the patter on experts, it’s because pattern recognition is the method of the expert. In everyday life we are all experts at most aspects of getting by. We can recognize mood through body language, intonation and movement without the subject having to tell us that he/she is upset, and we are good enough that we can recognize when the other person is trying to cover up the emotion. Babies use pattern recognition to recognize individuals, talk, walk and use body language so well that they train adults in a very short space of time. The ability to recognize patterns seems to be hard-wired into the human brain, so much so that we see patterns where none exist; the void must be filled. For those of you who, like me, enjoy obscure technical phrases, the seeing of images where none exist, such as the face of a man in the moon, cloud shapes or the constellations of stars, is called “pareidolia”, and this, together with the more general perception of patterns in random data (apophenia), leads to misinterpretation of statistics, reckless gambling, conspiracy theories and religious experiences. They are all forms of cognitive error.

There are any number of examples of pattern recognition, or thin slicing as it is coming to be known, and not a few experiments have been done to demonstrate its power and utility. In one famous case a 2500-year-old kouros (a particular type of Greek statue) was purchased in 1983 for 10 million dollars by a Californian museum on the basis of authenticated documentation, comparisons with other similar statues, analysis of the marble, stereomicroscopy, mass spectrometry and countless other spectrometries, and X-ray diffraction. After purchasing it, the museum asked numerous experts in the field to give an opinion. Almost unanimously and independently, they agreed that the statue was wrong, and in most cases they made the decision as soon as they saw the statue but often couldn’t say why they felt that way. Because of these opinions the museum dug deeper into the documentary authentication, and much of it turned out to be fake; on further investigation the styling of the kouros was wrong as well. In addition, it was felt that the aging of the statue was not natural but due to the application of potato mold. In short, the opinion given by experts, often within a couple of seconds of seeing the statue, was likely correct, while the detailed technical opinion formed over months was probably wrong (*Gladwell, M. Blink: the power of thinking without thinking. Pages 3-8. Back Bay Books NY, 2005.).

In an experiment that essentially compared hypothetic-deduction with pattern recognition, the investigators asked ordinary people and professional gamblers to assess a card game not known to them and develop a winning strategy. What they found was that the non-gamblers took about 50 cards before developing a strategy, but it was not until the 80th card that most consciously knew what was happening. The gamblers, on the other hand, developed the strategy by the tenth card. More than that, based on sweat detectors, the professionals had physiological reactions at the same time as they found the solution, but they didn’t realize that they had cracked it until about the 40th card (*Bechara, A. et al. Deciding Advantageously Before Knowing the Advantageous Strategy. Science 275 1293-1295 Feb 1997.).

In another study, students were shown three 10-second video clips of a teacher, without sound, and asked to evaluate the teacher’s effectiveness. The clips were then cut back to 5 seconds and then to 2 seconds without changing the ratings. When these ratings were compared with student evaluations made after a full semester with the teacher, they were extremely similar (*Ambady, N. Rosenthal, R. Half a minute: predicting teacher evaluations from thin slices of nonverbal behavior and physical attractiveness. J Personality Soc Psychol 64(3) 431-441 1993).

In another study (*Gosling, S. et al. A room with a cue: personality judgments based on offices and bedrooms. J Personality Soc Psychol 82(3) 379-398, 2002), 80 people were asked to evaluate their good friends on extraversion, agreeableness, conscientiousness, openness to novel experiences and emotional stability, and their ratings were compared with ratings of those same people by total strangers whose only contact with them was to spend 15 minutes looking around their dorm room. The strangers’ ratings matched the investigator’s personality profile more closely than did the friends’ in conscientiousness, openness to novel experiences and emotional stability, and almost as well in agreeableness. On this set of criteria, which are used routinely in personality profiles, the strangers, using only the room as a guide and with no contact at all with the subject, did better than the friends. What the strangers seem to have done is make decisions based on minimal information that were better than those good friends could make, possibly because they were not overwhelmed by the sheer amount of information in a person’s personality.

Increasing the amount of information does not seem to make the solution any more accurate. A group of psychologists were given a case study in fragments. First they were given a minimal amount of information and asked to do two things: make a diagnosis and state the level of confidence they had in that diagnosis. Then they were given more information, which they could integrate with what they already knew. They were told that they could keep or change their previous diagnosis, but again they had to state their confidence level. This continued until they had received all of the information that was available, and they were given one last chance to review the case, change the diagnosis and state their confidence. What happened was that with each new influx of information the accuracy of the diagnosis did not improve, but their confidence level increased at every new input. Their confidence over-reached their accuracy (*Oskamp, S. Overconfidence in case study judgments. J Consult Psychol 29(3) 1965).
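The gap between confidence and accuracy described here can be written as a simple calculation: mean stated confidence minus the proportion of correct diagnoses at each stage. The sketch below assumes each judgment is recorded as a correct/incorrect flag plus a confidence between 0 and 1. The numbers are hypothetical, invented only to show the arithmetic, and are not Oskamp's data.

```python
def overconfidence_by_stage(stages):
    """For each stage return (accuracy, mean confidence, gap) as fractions."""
    report = []
    for judgments in stages:
        accuracy = sum(1 for correct, _ in judgments if correct) / len(judgments)
        confidence = sum(conf for _, conf in judgments) / len(judgments)
        report.append((accuracy, confidence, confidence - accuracy))
    return report

# HYPOTHETICAL judgments, invented for illustration only (not study data).
# Each tuple is (was the diagnosis correct?, stated confidence).
stages = [
    [(True, 0.3), (False, 0.3), (False, 0.4), (False, 0.2)],  # minimal information
    [(True, 0.5), (False, 0.4), (False, 0.5), (False, 0.4)],  # more information
    [(True, 0.6), (False, 0.6), (False, 0.7), (False, 0.5)],  # all information
]

report = overconfidence_by_stage(stages)
for number, (accuracy, confidence, gap) in enumerate(report, start=1):
    print(f"stage {number}: accuracy {accuracy:.2f}, "
          f"confidence {confidence:.2f}, gap {gap:+.2f}")
```

A positive and growing gap is the pattern the study reports: accuracy stays flat while confidence climbs.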

So experts are able to come to accurate decisions more often than not, not simply because they can identify the critical identifiers but because they ignore information that is not immediately relevant. The Goldman Triage Algorithm used a computer program to find out which characteristics are the minimum required to sort serious causes of chest pain from the less urgent, and a mathematical heuristic to rate the interplay of these identifiers, to produce a set of questions and tests that would make an expert triagist out of a non-expert by reducing the amount of information necessary to solve the immediate problem. Long-term management is another matter: there, all of the questions regarding predispositions such as family history, diabetes, stress management ability etc. are essential to countering the disease, but they are only confounding issues in the diagnosis.

Section Summary

Students and non-expert clinicians have been taught that they should gather as much information as possible before coming to a conclusion. Experts, on the other hand, are able to make the same decision with a minimum of information and would likely become confused if more information were taken in. The literature on cognition suggests that for complex problem solving where there is so much detail available, pattern recognition is as accurate as, and often more accurate than, hypothetic-deductive reasoning. What we do with students is basically tell them to do exactly the opposite of what an expert does, and we give them no guidance on how to become an expert except to say it takes time. Certainly, after more than a couple of decades of teaching, I have seen very little evidence that this transformation takes place with the majority of my students, or with the students I see from other teachers, even years after they start post-graduate training in manual therapy. And why should it? We tell them not to trust their instincts and not to think about the solution until all of the information is in, so that it can be analyzed and integrated as the basis of an informed decision. On its face this seems the right thing but, counter to what “common sense” tells us, it is exactly the wrong thing to do: we establish a culture of anal retentiveness when it comes to information gathering and detail, and of mistrust in our finely honed ability to recognize patterns from the morass of data around us. It is only the few who are self-confident, independently minded and critical of their results who move on from hypothetic-deduction to pattern recognition. On the other hand, students and novices are not experts and haven’t had the opportunity to gain the experience necessary to become an expert in their field, so there should be a method that allows them to use their pattern recognition as it develops and to trust in their ability to use it accurately.

The next section in this series will offer a solution to this dilemma: Script Focused Deduction.
